Scala is a hybrid functional/imperative object/actor language for the Java Virtual Machine which recently gained notoriety when Twitter selected it as the basis of their future development. My language Reia is also a hybrid functional/imperative object/actor language. You may think given these similarities I would like Scala... but I don't.

I originally tried Scala back in 2007, shortly after I started becoming proficient in Erlang. I was extremely interested in the actor model at the time, and Scala provides an actor model implementation. Along with Scheme and Io, it was one of the few languages besides Erlang I had discovered which had attempted an actor model implementation, and Scala's actor support seemed heavily influenced by Erlang (even at the syntactic level). I was initially excited about Scala but slowly grew discontented with it.

At first I enjoyed being able to use objects within the sequential portions of my code and actors for the concurrent parts. This is an idea I would carry over into Ruby with the Revactor library, an actor model implementation I created for Ruby 1.9. Revactor let you write sequential code using normal Ruby objects, while using actors for concurrency. Like Scala, Revactor was heavily influenced by Erlang, to the point that I had created an almost identical translation of Erlang's actor API to Ruby, as MenTaLguY had in his actor library called Omnibus. MenTaLguY is perhaps the most concurrency-aware Ruby developer I have ever met, so I felt I might be on to something.

However, rather quickly I discovered something about using actors and objects in the same program: there was considerable overlap in what actors and objects do. More and more I found myself trying to reinvent objects with actors. I also began to realize that Scala was running into this problem as well. There were some particularly egregious cases. What follows is the most insidious one.

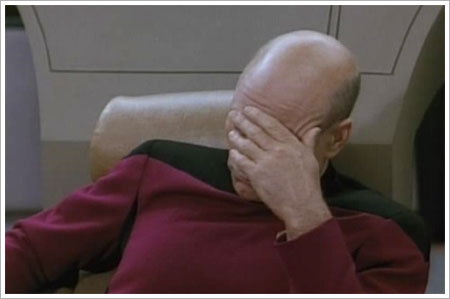

The WTF Operator

One of the most common patterns in actor-based programs is the Remote Procedure Call or RPC. As the actor protocol is asynchronous, RPCs provide synchronous calls in the form of two asynchronous messages, a request and a response.
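To make the "two asynchronous messages" idea concrete, here is a minimal sketch in plain Scala. It does not use any actor library: the `Request` and `Response` types, the `RpcSketch` object, and the queue-backed "actor" are all hypothetical stand-ins I made up for illustration, with a `LinkedBlockingQueue` playing the role of a mailbox.

```scala
import java.util.concurrent.{LinkedBlockingQueue, TimeUnit}

// Hypothetical message types for this sketch (not from any actor library).
final case class Request(payload: String, replyTo: LinkedBlockingQueue[Response])
final case class Response(payload: String)

object RpcSketch {
  // A toy "actor": a thread draining its own mailbox, one message at a time.
  def startEchoActor(): LinkedBlockingQueue[Request] = {
    val mailbox = new LinkedBlockingQueue[Request]()
    val worker = new Thread(new Runnable {
      def run(): Unit = while (true) {
        val req = mailbox.take()                          // wait for a request
        req.replyTo.put(Response("echo: " + req.payload)) // asynchronous message #2
      }
    })
    worker.setDaemon(true) // do not keep the JVM alive for this sketch
    worker.start()
    mailbox
  }

  // A synchronous call assembled from two asynchronous sends.
  // Returns None if no reply arrives within one second.
  def call(target: LinkedBlockingQueue[Request], payload: String): Option[Response] = {
    val replies = new LinkedBlockingQueue[Response]()
    target.put(Request(payload, replies))     // asynchronous message #1
    Option(replies.poll(1, TimeUnit.SECONDS)) // block until the reply shows up
  }

  def main(args: Array[String]): Unit = {
    val echo = startEchoActor()
    println(call(echo, "hello"))
  }
}
```

The caller only looks synchronous: underneath, `call` sends one message, then parks itself until the second one comes back or the timeout expires.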

RPCs are extremely prevalent in actor-based programming, to the point that Joe Armstrong, creator of Erlang, says:

95% of the time standard synchronous RPCs will work - but not all the time, that's why it's nice to be able to open up things and muck around at the message passing level.

Seeing RPCs as exceedingly common, the creators of Scala created an operator for it: "!?"

WTF?! While it's easy to poke fun at an operator that resembles an interrobang, the duplicated semantics of this operator are what I dislike. To illustrate the point, let me show you some Scala code:

    response = receiver !? request

and the equivalent code in Reia:

    response = receiver.request()

Reia can use the standard method invocation syntax because in Reia, all objects are actors. Scala takes an "everything is an object" approach, with actors being an additional entity which duplicates some, but not all, of the functions of objects. In Scala, actors are objects, whereas in Reia objects are actors.

Scala's object model borrows heavily from Java, which is in turn largely inspired by C++. In this model, objects are effectively just states, and method calls (a.k.a. "sending a message to an object") are little more than function calls which act upon and mutate those states.

Scala also implements the actor model, which is in turn inspired by Smalltalk and its messaging-based approach to object orientation. The result is a language which straddles two worlds: objects acted upon by function calls, and actors which are acted upon by messages.

Furthermore, Scala's actors fall prey to Clojure creator Rich Hickey's concerns about actor-based languages:

It reduces your flexibility in modeling - this is a world in which everyone sits in a windowless room and communicates only by mail. Programs are decomposed as piles of blocking switch statements. You can only handle messages you anticipated receiving. Coordinating activities involving multiple actors is very difficult. You can't observe anything without its cooperation/coordination - making ad-hoc reporting or analysis impossible, instead forcing every actor to participate in each protocol.

Reia offers a solution to this problem with its objects-as-actors approach: all actor-objects speak a common protocol, the "Object" protocol, and above that, they speak whatever methods belong to their class. Objects implicitly participate in the same actor protocol, because they all inherit the same behavior from their common ancestor.

Scala's actors... well... if you !? them a message they aren't explicitly hardcoded to understand (and yes nitpickers, common behaviors can be abstracted into functions) they will ?! at your message and ignore it.
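The difference between a handler that only knows its anticipated messages and one that also inherits a shared protocol can be sketched with ordinary partial functions. This is neither how Reia dispatches messages nor how Scala's actor library is implemented; `ProtocolSketch`, the `"inspect"`/`"ping"` messages, and the counter handler are hypothetical names chosen purely for illustration.

```scala
object ProtocolSketch {
  type Handler = PartialFunction[Any, String]

  // Messages every "object" understands, standing in for a shared base protocol.
  val commonProtocol: Handler = {
    case "inspect" => "<object>"
    case "ping"    => "pong"
  }

  // A hand-written handler in the style of a bare actor:
  // it only has cases for the messages its author anticipated.
  val counterOnly: Handler = {
    case ("add", n: Int) => "added " + n
  }

  // Class-specific messages first, then fall back to the shared protocol.
  val counterObject: Handler = counterOnly orElse commonProtocol

  def main(args: Array[String]): Unit = {
    println(counterOnly.isDefinedAt("ping"))   // the bare handler has no case for "ping"
    println(counterObject.isDefinedAt("ping")) // the combined handler falls back to commonProtocol
    println(counterObject(("add", 2)))
  }
}
```

The `orElse` chaining is the "common behaviors can be abstracted into functions" escape hatch: it works, but every handler has to remember to opt in, whereas inheriting from a common ancestor makes the shared protocol the default.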

Two kinds of actors?

One of the biggest strengths of the Erlang VM is its approach to lightweight concurrency. The Erlang VM was designed from the ground up so you don't have to be afraid of creating a large number of Erlang processes (i.e. actors). Unlike the JVM, the Erlang VM is stackless and therefore much better at lightweight concurrency. Erlang's VM also has advanced mechanisms for load balancing its lightweight processes across CPU cores. The result is a system which lets you create a large number of actors, relying on the Erlang virtual machine to load balance them across all the available CPU cores for you.

Because the JVM isn't stackless and uses native threads as its concurrency mechanism, Scala couldn't implement Erlang-style lightweight concurrency, and instead compromises by implementing two types of actors. One type of actor is based on native threads. However, native threads are substantially heavier than a lightweight Erlang process, limiting the number of thread-based actors you can create in Scala as compared to Erlang. To address this limitation, Scala implements its own form of lightweight concurrency in the form of event-based actors. Event-based actors do not have all the functionality of thread-based actors but do not incur the penalty of needing to be backed by a native thread.

Should you use a thread-based actor or an event-based actor in your Scala program? This is a case of implementation details (namely the JVM's lack of lightweight concurrency) creeping out into the language design. Projects like Kilim are trying to address lightweight concurrency on the JVM, and hopefully Scala will be able to leverage such a project in the future as the basis of its actor model and get rid of the threaded/evented gap, but for now Scala makes you choose.

Scala leaves you with three similar, overlapping constructs to choose from when modeling state, identity, and concurrency in programs: objects, event-based actors, and thread-based actors. Which should you choose?

Reia provides both objects and actors, but actors are there for edge cases and intended to be used almost exclusively by advanced programmers. Reia introduces a number of asynchronous concepts into its object model, and for that reason objects alone should suffice for most programmers, even when writing concurrent programs.

Advantages of Scala's approach

Reia's approach comes with a number of disadvantages, despite the theoretical benefits I've outlined above. For starters, Scala is a language built on the JVM, which is arguably the best language virtual machine available. Scala's sequential performance tops Erlang even if its performance in concurrent benchmarks typically lags behind.

Reia's main disadvantage is that its object model does not work like any other language in existence, unless you consider Erlang's approach an "object model". Each object, being a shared-nothing, individually garbage collected Erlang process, is much heavier (approximately 300 machine words at minimum) than an object in your typical object oriented language (where I hear some runtimes offer zero overhead objects, or something). Your typical "throw objects at the problem" programmer is going to build a system, and the more objects that are involved the more error prone it's going to become. Reia is a language which asks you to sit back for a second and ponder what can be modeled as possibly nested structures of lists, tuples, and maps instead of objects.

Reia does not allow cyclical call graphs, meaning that an object receiving a call cannot call another object earlier in the call graph. Instead, objects deeper in the call graph must interact with any previously called objects asynchronously. If your head is spinning now I don't blame you, and if you do understand what I'm talking about I cannot offer any solutions. Reia's call graphs must be acyclic, and I have no suggestions for potential Reia developers as to how to avoid this problem, besides being aware of the call flow and ensuring that all "back-calls" are done asynchronously. Cyclic call graphs result in a deadlock, one which can presently only be detected through timeouts, and remain a terrible pathological case. I really wish I could offer a better solution, and I am eager to hear from anyone who can help me find one. This is far and away the biggest problem I have ever been faced with in Reia's object model and I am sad to say I do not have a good solution.
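For anyone whose head is spinning, the cyclic case can be reproduced outside Reia. The sketch below is plain Scala on top of `java.util.concurrent` and makes one loud assumption: each "object" is modeled as a single-threaded executor, so it can only service one call at a time. `CycleSketch`, `syncCall`, and the timeout values are hypothetical and are not Reia's implementation.

```scala
import java.util.concurrent.{Callable, ExecutorService, Executors, TimeUnit, TimeoutException}

object CycleSketch {
  // Assumption: an "object" is a single-threaded executor, handling one call at a time.
  // Runs `body` on `target` and blocks for the result; None means the call timed out.
  def syncCall(target: ExecutorService, timeoutMs: Long)(body: () => String): Option[String] = {
    val future = target.submit(new Callable[String] { def call(): String = body() })
    try Some(future.get(timeoutMs, TimeUnit.MILLISECONDS))
    catch { case _: TimeoutException => future.cancel(true); None }
  }

  def demo(): (Option[String], Option[String]) = {
    val a = Executors.newSingleThreadExecutor()
    val b = Executors.newSingleThreadExecutor()
    try {
      // a -> b -> a: b's synchronous back-call queues behind a's current call,
      // and a's current call is itself waiting on b. Only the timeout breaks the cycle.
      val cyclic = syncCall(a, 2000) { () =>
        syncCall(b, 1000) { () =>
          syncCall(a, 200)(() => "from a").getOrElse("back-call timed out")
        }.getOrElse("b timed out")
      }
      // a -> b, with the back-call sent asynchronously (fire and forget): no deadlock.
      val async = syncCall(a, 2000) { () =>
        syncCall(b, 1000) { () =>
          a.submit(new Runnable { def run(): Unit = () }) // queued; b does not wait for it
          "b done"
        }.getOrElse("b timed out")
      }
      (cyclic, async)
    } finally { a.shutdownNow(); b.shutdownNow() }
  }

  def main(args: Array[String]): Unit = println(demo())
}
```

In the first call the back-call can never run while `a` is blocked, so the only thing that notices the problem is the timeout. In the second, `b` hands the back-call to `a`'s queue and returns immediately, which is the "do all back-calls asynchronously" advice in miniature.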

All that said, Scala's solution is so beautifully familiar! It works with the traditional OOP semantics of C++ which were carried over into Java, and this is what most OOP programmers are familiar with. I sometimes worry that the approach to OOP I am advocating in Reia will be rejected by developers who are familiar with the C++-inspired model, because Reia's approach is more complex and more confusing.

Furthermore, Scala's object model is not only familiar, it's extremely well-studied and well-optimized. The JVM provides immense capability to inline method calls, which means calls which span multiple objects can be condensed down to a single function call. This is because the Smalltalk-inspired illusion that these objects are receiving and sending messages is completely suspended, and objects are treated in C++-style as mere chunks of state, thus an inlined method call can act on many of them at once as if they were simple chunks of state. In Reia, all objects are concurrent, share no state, and can only communicate with messages. Inlining calls across objects is thoroughly impossible since sending messages in Reia is not some theoretical construct, it's what really happens and cannot simply be abstracted away into a function call which mutates the state of multiple objects. Each object is its own world and synchronizes with the outside by sending other objects messages and waiting for their responses (or perhaps just sending messages to other objects then forgetting about it and moving on).

So why even bother?

Why even bother pursuing Reia's approach then, if it's more complex and slow? I think its theoretical purity offers many advantages. Synchronizing concurrency through the object model itself abstracts away a lot of complexity. Traditional object usage patterns in Reia (aside from cyclical call graphs) have traditional object behavior, but when necessary, objects can easily be made concurrent by using them asynchronously. Because of this, the programmer isn't burdened with deciding what parts of the system need to be sequential and what parts concurrent ahead of time. They don't need to rip out their obj.method() calls and replace them with WTFs!? when they need some part of the system they didn't initially anticipate to be concurrent. Programmers shouldn't even need to use actors directly unless they're implementing certain actor-specific behaviors, like FSMs (the 5% case Joe Armstrong was talking about).

Why build objects on actors?

Objects aren't something I really have a concrete, logical defense of, as opposed to a functional approach. To each their own is all I can say. Object oriented programming is something of a cargo cult... its enthusiasts give defenses which often apply equally to functional programming, especially functional programming with the actor model as in Erlang.

My belief is that if concurrent programming can be mostly abstracted to the level of objects themselves, a lot more people will be able to understand it. People understand objects and the separation of concerns which is supposedly implicit in their identities, but present thread-based approaches to concurrency just toss that out the window. The same problem is present in Scala: layering the actor model on top of an object system means you end up with a confusing upper layer which works kind of like objects, but not quite, and runs concurrently, while objects don't. When you want to change part of the system from an object into an actor, you have to rewrite it into actor syntax and change all your lovely dots into !?s?!

Building the object system on top of the actor model itself means objects become the concurrency primitive. There is no divorce between objects and actors. There is no divorce between objects, thread-based actors, and event-based actors as in Scala. In Reia objects are actors, speak a common protocol, and can handle the most common use cases.

When objects are actors, I think everything becomes a lot simpler.